Denying the Climate Catastrophe: 4b. Problems With The Surface Temperature Record

This is part B of the fourth chapter of an ongoing series. Other parts of the series are here:

- Introduction

- Greenhouse Gas Theory

- Feedbacks

- A) Actual Temperature Data; B) Problems with the Surface Temperature Record (this article)

- Attribution of Past Warming; A) Arguments for it being Man-Made; B) Natural Attribution

- Climate Models vs. Actual Temperatures

- Are We Already Seeing Climate Change

- The Lukewarmer Middle Ground

- A Low-Cost Insurance Policy

In part A of this chapter, we showed that the world had indeed warmed over the past 30-100 years, whether you looked at the surface temperature record or the satellite record. Using either of these metrics, though, we did not see global warming accelerating, nor did we see warming rates that were faster than predicted. In fact, we saw the opposite.

One story I left out of part A, because it did not affect the basic conclusions we drew, is the criticism leveled at the surface temperature record. In this part B, we will discuss some of these criticisms and see why many skeptics believe the 0.8C warming number for the past century is exaggerated. We will also gain some insight into why satellite-measured warming rates may be closer to the mark than rates determined by surface temperature stations.

Uncorrected Urban Biases

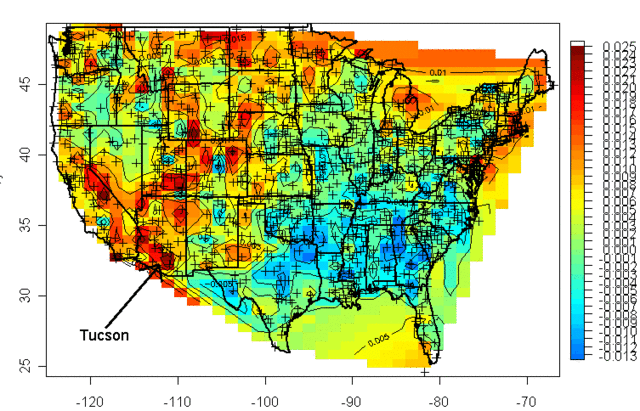

Years ago a guy named Steve McIntyre published a graphical portrayal of warming rates across the US. Charts like this are common nowadays. This one (almost 10 years old) drew from temperature measurement stations whose locations are shown with the crosses on the map:

I was living in Arizona at the time, and I was interested to learn that the highest warming rate was being recorded at the USHCN station in Tucson (remember, the fact that Arizona is hot is no reason to expect it to have high warming rates; absolute temperature and warming rate are two different things). At the time, Anthony Watts was just kicking off an initiative to develop quality-control data for USHCN stations by having amateurs photograph the sites and upload the pictures to a central database. I decided I would go down to the Tucson site to experience the highest warming rate myself. This is what I found when I tracked down the station and took this picture (which has been reproduced all over the place at this point):

That is the temperature station, the fenced-in white box (the uproar over this picture eventually caused this location to be closed). It sat in the middle of a parking lot in the middle of a major university in the middle of a growing city. 100 years ago this temperature station was in the countryside, essentially in open desert: no paving, no buildings, no cars. So we are getting the highest warming rate in the country by comparing a temperature taken today in an asphalt parking lot in the middle of a city to a temperature taken a hundred years ago in open desert.

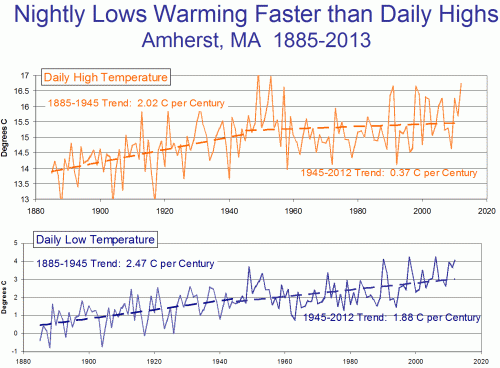

The problem with this is what's called the urban heat island effect. Buildings and concrete absorb heat from the sun during the day, more than would typically be absorbed by raw land in its natural state. This heat is reradiated at night, causing nights to be warmer in cities than in the areas surrounding them. If you live in a city, you will likely hear weather reports that predict colder temperatures in outlying areas, or warn of freezes in the countryside but not in the city itself.

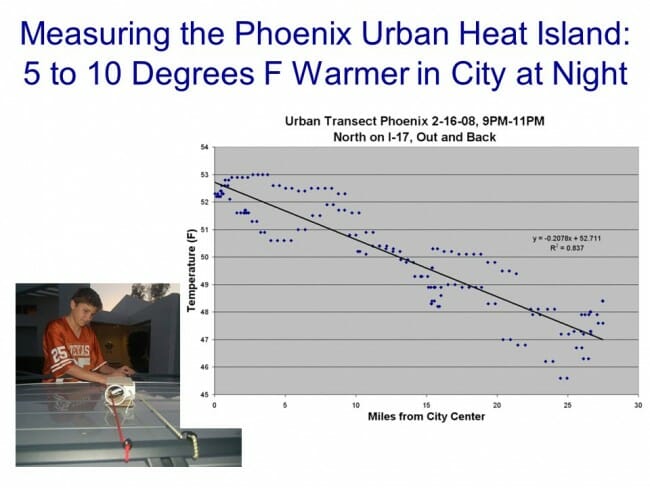

It turns out that this urban heat island effect is easily measured -- it even makes a great science fair project!

My son and I did this project years ago, attaching a small GPS and temperature probe to a car. We then drove out of the city center into the country and back in the early evening, when the urban heat island effect should be largest. We drove out and then back to average out any effects of overall cooling during our testing. One of the trips is shown above, with around 6 degrees F of temperature change. We, and most others who have done this in other cities, found between 5 and 10 degrees F of warming as one drives into a city at night.
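For readers who want to try this, here is a toy model of why the out-and-back drive works. It is a sketch under made-up assumptions, not our actual data: 11 evenly spaced sampling points, a hypothetical 6 F linear drop from downtown to the countryside, a steady cooling of 0.05 F per sampling interval, and a constant driving speed so that each point is passed at times symmetric about the turnaround.

```python
def drive_readings(profile, drift_per_step):
    """Simulate an out-and-back drive at constant speed (toy model).

    profile[i] is the hypothetical true temperature at sampling point i,
    with i = 0 at the city center. Temperatures drift linearly in time by
    drift_per_step degrees per sampling interval (negative = evening cooling).
    Returns (outbound, averaged) readings indexed by position.
    """
    n = len(profile)
    last_time = 2 * (n - 1)  # time index of the final reading back downtown
    # Outbound leg: point i is reached at time i.
    outbound = [profile[i] + drift_per_step * i for i in range(n)]
    # Return leg: point i is reached again at time last_time - i.
    inbound = [profile[i] + drift_per_step * (last_time - i) for i in range(n)]
    # Averaging the two passes gives every point the same drift offset,
    # so differences between points are drift-free.
    averaged = [(o + b) / 2 for o, b in zip(outbound, inbound)]
    return outbound, averaged


# Hypothetical profile: 76 F downtown falling linearly to 70 F in the country.
profile = [76 - 0.6 * i for i in range(11)]
outbound, averaged = drive_readings(profile, drift_per_step=-0.05)

print(f"true city-country difference: {profile[0] - profile[-1]:.2f} F")
print(f"outbound leg only:            {outbound[0] - outbound[-1]:.2f} F")
print(f"out-and-back average:         {averaged[0] - averaged[-1]:.2f} F")
```

Because the country readings on the outbound leg are taken later, after more cooling, the outbound leg alone overstates the difference (6.5 F instead of 6.0 F in this toy case), while the average of the two legs recovers the true 6.0 F. Real evening cooling is not perfectly linear, so in practice the cancellation is only approximate.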

If this effect were constant over time, it would not pose too many problems for our purposes here, because we are looking at changes in average temperatures over time, not absolute values. But the urban heat island warming of a city (and of particular temperature stations within it) increases as the urban area grows larger. Because this urban warming is many times larger than the global warming signal we are trying to measure, and since most temperature stations are located near growing urban areas, it introduces an important potential bias into the measurements.
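The difference between a constant and a growing urban bias is easy to see with a least-squares trend. In this sketch, the 0.8C-per-century underlying warming echoes the figure discussed above, while the 2C urban offset and its linear growth over a century are purely hypothetical numbers chosen for illustration.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


years = list(range(100))
true_warming = [0.008 * t for t in years]  # assumed 0.8C per century

# Case 1: a hypothetical fixed urban offset of 2C that never changes.
constant_uhi = [temp + 2.0 for temp in true_warming]
# Case 2: the same hypothetical offset phased in from 0C to 2C as the city grows.
growing_uhi = [temp + 2.0 * t / 99 for temp, t in zip(true_warming, years)]

for label, series in [("true", true_warming),
                      ("constant UHI", constant_uhi),
                      ("growing UHI", growing_uhi)]:
    print(f"{label:>13}: {100 * ols_slope(years, series):.2f} C per century")
```

A constant 2C offset leaves the fitted trend at 0.8C per century, but the same 2C offset phased in over the century adds about 2C per century of spurious warming, for roughly 2.8C per century in total.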

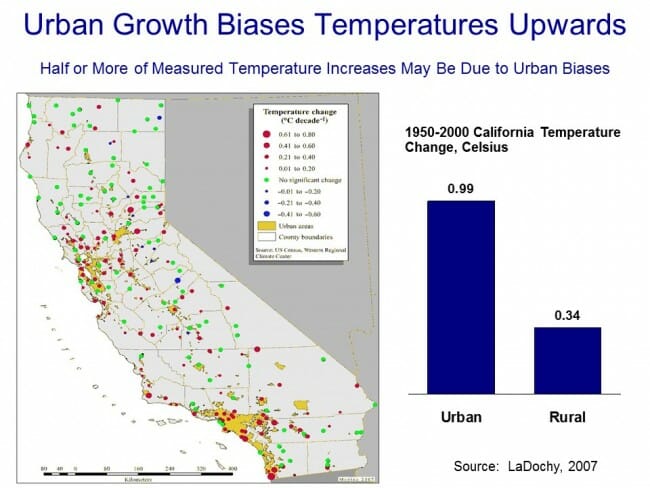

A number of studies have found that, in fact, we do indeed see more warming historically in thermometers located in urban areas than in those located in rural areas. Two studies in California have shown much lower warming rates at rural thermometers than at urban ones:

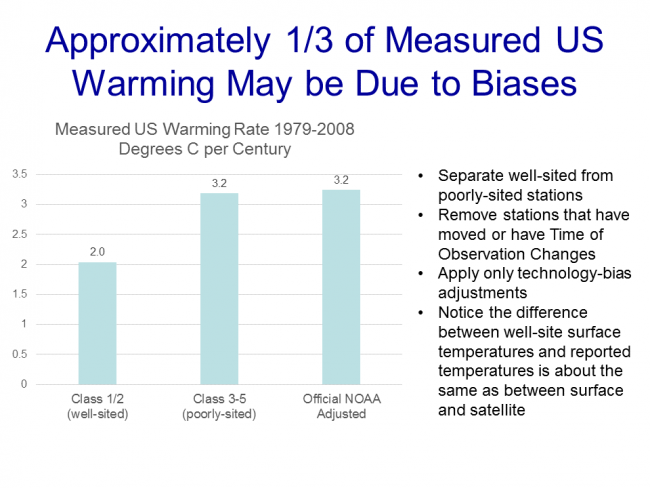

Anthony Watts has been working for years to do this same analysis for the entire US. In fact, the pictures of the Tucson temperature station above were part of the first phase of his project to photograph each USHCN site used in the global warming statistics. Once he had pictures, he compared the details of each site with a classification system scientists use to rate the quality of temperature sites, from the best (class 1) to the worst, with the most biases (class 5). He found that perhaps a third of the warming in the official NOAA numbers may come from siting biases introduced by bad sites. Put another way, the warming at well-sited temperature stations was only about 2/3 of that in the official metric.
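The core of this kind of siting analysis is simple: classify each station, then compare average trends across classes. The sketch below uses invented placeholder trends, picked so the ratio lands near the 2/3 figure, and assumes classes 1 and 2 count as well sited and classes 3 through 5 as poorly sited. It is not Watts' data or a reproduction of his method.

```python
from statistics import mean

# Hypothetical (siting class, trend in C per century) pairs; not real station data.
stations = [
    (1, 0.5), (2, 0.6), (2, 0.7),
    (3, 0.8), (4, 0.9), (4, 0.9), (5, 1.0),
]

# Assumed split: classes 1-2 are "well sited", classes 3-5 are "poorly sited".
well_sited = [trend for cls, trend in stations if cls <= 2]
poorly_sited = [trend for cls, trend in stations if cls >= 3]

ratio = mean(well_sited) / mean(poorly_sited)
print(f"well-sited mean trend:   {mean(well_sited):.2f} C/century")
print(f"poorly sited mean trend: {mean(poorly_sited):.2f} C/century")
print(f"ratio: {ratio:.2f}")
```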

By the way, this is one other reason why I tend to favor the satellite measurements. Going back to the numbers we showed in part A, the satellite temperature metric had about 2/3 the trend of the surface temperature record, or almost exactly what the surface readings would show if this siting bias were eliminated (the absolute values of the trends don't match, because they are for different time periods and different geographies).

There is one other aspect of this chart that might have caught your eye -- if some temperature stations are showing 2 degrees of warming and some 3.2 degrees of warming, why is the total 3.2 degrees of warming? Shouldn't it be somewhere in the middle?
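The puzzle comes down to a basic property of averages: any weighted average with non-negative weights must fall between the smallest and largest values being averaged. The sketch below takes the 2 and 3.2 degree figures from the chart and sweeps through hypothetical weightings of good and bad sites.

```python
def weighted_average(values, weights):
    """Weighted mean; with non-negative weights it can never leave [min, max]."""
    total = sum(weights)
    return sum(v * w for v, w in zip(values, weights)) / total


# Warming figures from the chart: well-sited vs. poorly sited stations.
class_trends = [2.0, 3.2]

# Hypothetical weightings, from all weight on good sites to all weight on bad sites.
for good_share in [1.0, 0.75, 0.5, 0.25, 0.0]:
    avg = weighted_average(class_trends, [good_share, 1.0 - good_share])
    print(f"good-site weight {good_share:.2f}: average {avg:.2f}")
```

The average only reaches 3.2 when the well-sited stations get zero weight, so a total sitting at the top of the range suggests something other than straight spatial averaging is moving the number.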

One explanation is that NOAA and other bodies take the data from these stations and perform a number of data manipulation steps in addition to a straight spatial averaging. One such step is to use a computer process to try to correct temperature stations based on the values from neighboring stations. The folks who run these indices argue that this computational process overcomes the site bias problem. Skeptics will argue that this approach is utter madness -- why work to correct a known bad temperature point rather than just eliminate it? If you have a good compass and a bad compass, you don't somehow mathematically average the results to find north; you throw out the bad one and use the good one. In short, skeptics argue that this approach does not eliminate the error, it just spreads the error around to all the good stations, smearing it like peanut butter. Here is an example from GISS, using station data that has only been adjusted for Time of Observation changes (TOBS).

This is exactly what we might expect - little warming out in undeveloped nature in Grand Canyon National Park, lots of warming in a large and rapidly growing modern city (yes, the Tucson data is from our favorite temperature station featured above). Now, here is the same data after GISS has adjusted it:

You can see that Tucson has been adjusted down a degree or two, but Grand Canyon has been adjusted up a degree or two (with the earlier mid-century spike adjusted down). OK, so it makes sense that Tucson has been adjusted down, though there is a very good argument to be made that it should have been adjusted down more, say by at least 3 degrees. But why does the Grand Canyon need to be adjusted up by about a degree and a half? What is currently biasing it colder by 1.5 degrees, which is a lot? One suspects GISS is doing some sort of averaging that pulls the Grand Canyon and Tucson from opposite ends toward a common mean -- they are not eliminating the urban bias from Tucson, they are just spreading it around to other stations in the region.
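The peanut-butter concern can be illustrated with a deliberately naive adjustment scheme. To be clear, the function below is a hypothetical stand-in, not the GISS or NOAA homogenization procedure; the trend values, the extra rural station, and the 50% blending weight are all invented for illustration.

```python
from statistics import mean


def blend_toward_neighbors(trends, weight=0.5):
    """Hypothetical, deliberately naive homogenization (not GISS or NOAA code).

    Each station's trend is pulled part of the way toward the mean trend of
    the other stations in the region.
    """
    adjusted = {}
    for name, trend in trends.items():
        others = [t for other, t in trends.items() if other != name]
        adjusted[name] = (1 - weight) * trend + weight * mean(others)
    return adjusted


# Illustrative trends in degrees per century; not actual station values.
raw = {"Grand Canyon": 0.0, "Rural station B": 0.5, "Tucson": 3.0}
blended = blend_toward_neighbors(raw)
# The "bad compass" alternative: drop the contaminated station entirely.
dropped = {name: t for name, t in raw.items() if name != "Tucson"}

for name in raw:
    print(f"{name:>16}: raw {raw[name]:.2f} -> blended {blended[name]:.3f}")
print(f"regional mean, raw:            {mean(raw.values()):.3f}")
print(f"regional mean, blended:        {mean(blended.values()):.3f}")
print(f"regional mean, Tucson dropped: {mean(dropped.values()):.3f}")
```

In this toy case the urban station is pulled down and the rural stations are pulled up, but the regional mean is exactly the same before and after blending (about 1.17), because this kind of blending only moves warming between stations. Dropping the contaminated station instead, as with the bad compass, lowers the regional mean to 0.25.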

Temperature Adjustments and Signal-To-Noise Ratio

Nothing is less productive, to my mind, than when skeptics yell the word "fraud!" on the issue of temperature adjustments. All temperature databases include manual adjustments, even the satellite indices that many skeptics favor. As mentioned above, satellite measurements have to be adjusted for orbital decay of the satellites, just as surface temperature measurements have to be adjusted for changes in the daily time of observation. We may argue that adjustment methodologies are wrong (as we did above with urban biases). We may argue that there are serious confirmation biases (nearly every single adjustment to every temperature, sea level, and ocean heat database tends to cool the past and warm the present, perhaps reinforced by preconceived notions that we should be seeing a warming signal). But I find that charges of fraud just cheapen the debate.

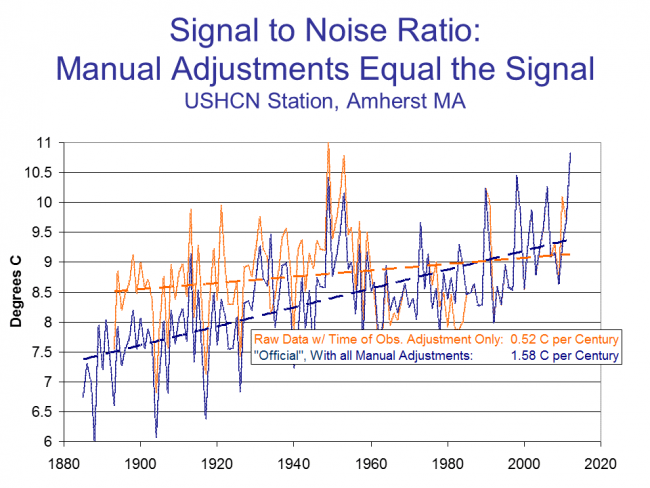

Even if the adjustments are all made with the best of intentions, we are still left with an enormous signal-to-noise problem. It turns out that the signal we are trying to measure -- warming over time -- is roughly equal in magnitude to the manual adjustments. In other words, the raw temperature data do not show warming; only the manually adjusted data do. This does not mean the adjusted data are wrong, but it should make us substantially less confident that we are truly measuring the signal amid all this adjustment noise. Here are two examples, one for an individual temperature station and one for the database as a whole:

In this first example, we show the raw data (with Time of Observation adjustments only) in orange, and the final official adjusted version in blue. The adjustments triple the warming rate for the last century.

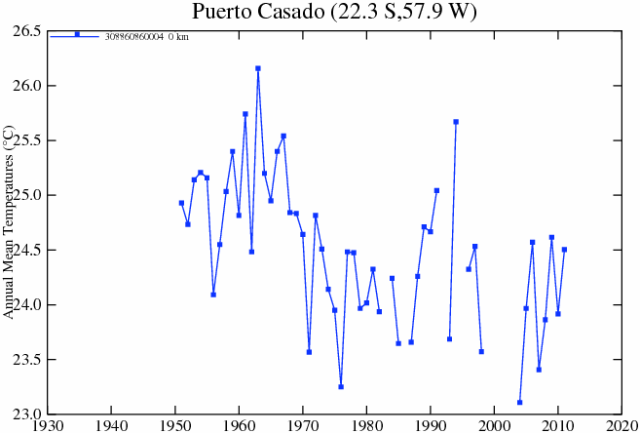
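Here is a sketch of how a handful of step adjustments that cool the early record can multiply a station's fitted trend. The raw series and the adjustment steps (0.8 degrees cooler before 1930, 0.4 degrees cooler from 1930 through 1969) are hypothetical values chosen for illustration, not the data from the chart above.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


def step_adjustment(year):
    """Hypothetical adjustments: cool the record before 1930, and less so before 1970."""
    if year < 1930:
        return -0.8
    if year < 1970:
        return -0.4
    return 0.0


years = list(range(1900, 2000))
raw = [0.005 * (y - 1900) for y in years]  # hypothetical raw trend: 0.5 per century
adjusted = [r + step_adjustment(y) for r, y in zip(raw, years)]

raw_trend = 100 * ols_slope(years, raw)       # degrees per century
adj_trend = 100 * ols_slope(years, adjusted)  # degrees per century
print(f"raw trend:      {raw_trend:.2f} degrees per century")
print(f"adjusted trend: {adj_trend:.2f} degrees per century")
print(f"ratio:          {adj_trend / raw_trend:.1f}x")
```

With these made-up steps, a raw trend of 0.5 degrees per century becomes roughly 1.5 degrees per century, about a tripling, even though no reading from 1970 onward was touched.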

We can see something similar for the whole US, where raw temperature measurements (this time before time of observation adjustments) actually show a declining temperature trend. In this case, the entirety of the global warming signal, and more, comes from the manual adjustments. Do these adjustments (literally thousands and thousands of them) make sense when taken as a whole? Does it make sense that there was some sort of warming bias in the 1920s that does not exist today? This is certainly an odd conclusion, given that it implies a bias exactly opposite to the urban heat island effect.
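One useful fact for reading charts like this: least-squares trends are linear, so the trend of the final series is exactly the trend of the raw data plus the trend of the adjustments. When the raw trend is negative, the adjustments therefore account for more than 100% of the final warming. The numbers below are hypothetical, chosen only to show the arithmetic.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


years = list(range(1900, 2000))
# Hypothetical raw national series that cools slightly (-0.2 degrees per century).
raw = [-0.002 * (y - 1900) for y in years]
# Hypothetical net adjustments that warm the record by 0.6 degrees per century.
adjustments = [0.006 * (y - 1900) for y in years]
final = [r + a for r, a in zip(raw, adjustments)]

raw_trend = ols_slope(years, raw)
adj_trend = ols_slope(years, adjustments)
final_trend = ols_slope(years, final)

# Least-squares slopes are linear, so final_trend == raw_trend + adj_trend.
share_from_adjustments = adj_trend / final_trend
print(f"final trend: {100 * final_trend:.2f} degrees per century")
print(f"share of final trend from adjustments: {share_from_adjustments:.0%}")
```

Here a raw cooling of 0.2 degrees per century plus 0.6 degrees per century of adjustments yields 0.4 degrees per century of final warming, 150% of which comes from the adjustments.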

We could go into much more detail, but this gives one an idea of why skeptics prefer the satellite measurements to the surface temperature record. Rather than endlessly working to get these public agencies to release their adjustment details and methodology for third-party validation by the public that pays them (an ongoing task that still has not been entirely successful), skeptics have simply moved on to a better approach where the adjustments (to a few satellites) are much easier to manage.

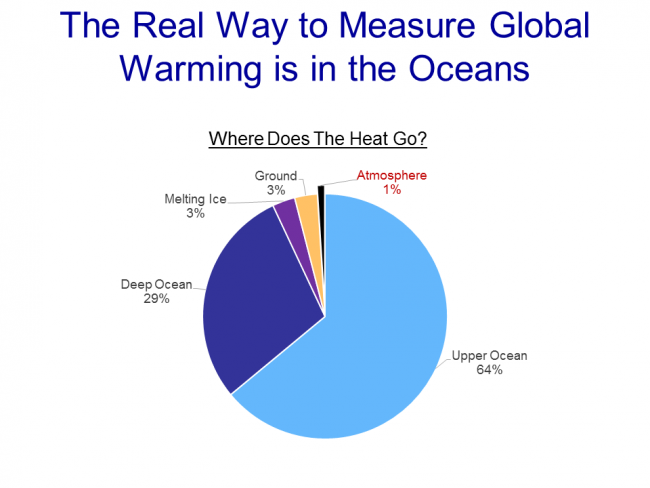

Ultimately, both approaches for seeking a global warming signal are a bit daft. Why? Because, according to the IPCC, of all the extra warming absorbed by the surface of the Earth from the greenhouse effect, only about 1% goes into the atmosphere:

Basically, water has a MUCH higher heat-carrying capacity than air, and over 90% of any warming should be going into the oceans. We are just starting to get some new tools for measuring changes in ocean heat content, though the task is hard because we are talking about changes of thousandths of a degree in the deep oceans.
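A rough back-of-the-envelope calculation shows why ocean temperature changes are so small. The masses and specific heats below are approximate textbook values, and the 1e22 joule energy input is a hypothetical round number chosen only for scale; the calculation also spreads the heat evenly through the whole ocean, which real warming does not do.

```python
# Approximate textbook values; treat them as order-of-magnitude inputs.
ATMOSPHERE_MASS_KG = 5.1e18
AIR_SPECIFIC_HEAT = 1004.0        # J/(kg K), dry air at constant pressure
OCEAN_MASS_KG = 1.4e21
SEAWATER_SPECIFIC_HEAT = 3990.0   # J/(kg K), typical seawater

atmosphere_capacity = ATMOSPHERE_MASS_KG * AIR_SPECIFIC_HEAT  # J per K
ocean_capacity = OCEAN_MASS_KG * SEAWATER_SPECIFIC_HEAT       # J per K

energy_j = 1e22  # hypothetical energy input, chosen only for scale

print(f"ocean/atmosphere heat capacity ratio: {ocean_capacity / atmosphere_capacity:.0f}")
print(f"warming if all of it went into the air:   {energy_j / atmosphere_capacity:.2f} K")
print(f"warming if all of it went into the ocean: {energy_j / ocean_capacity:.4f} K")
```

The ocean's heat capacity comes out roughly a thousand times that of the atmosphere, so energy that would warm the air by about two degrees warms the ocean as a whole by only about two thousandths of a degree.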

After this brief digression into the surface temperature records, it is now time to get back to our main line of discussion. In the next chapter, we will begin to address the all-important attribution question: Of the warming we have seen in the past, how much is man-made?

Chapter 5, Part A on the question of attributing past warming to man is here.