Until I watched the Global Warming Swindle, I had confined my criticisms of anthropogenic global warming theory to two general areas: 1) The models for future warming are overstated and 2) The costs of warming may not justify the costs of preventing it.

The movie offered an alternate hypothesis about global warming and climate change that, rather than refute the magnitude of anthropogenic global warming, provided a counter hypothesis. You should watch the movie, but the counter hypothesis is that historic temperature changes have been the result of variations in solar activity. Rather than causing these changes, increased atmospheric CO2 levels resulted from these temperature increases, as rising ocean temperatures caused CO2 to be driven out of solution from the world's oceans.

I thought one of the more compelling charts from Al Gore's PowerPoint deck, which became the movie An Inconvenient Truth, was the hundred-thousand-year close relationship between atmospheric CO2 levels and global temperature, as discovered in ice core analysis. The Swindle movie, however, claims that Gore is hiding something from that analysis in the scale of his chart -- that the same ice core analyses show that global temperature changes have led CO2 concentration changes by as much as 800 years. (short 2-minute snippet of this part of the movie here, highly recommended).

Well, this would certainly be something important to sort out. I have not done much real science since my physics days at Princeton, but my sense is that, except maybe at the quantum level, when B follows A it is hard to argue that B caused A.

So I have poked around a bit to see -- is this really what the ice core data shows, or is Swindle just making up facts or taking facts out of context a la the truther hypotheses about 9/11? Well, it turns out that everyone, even the die-hard global warming supporters, accepts this 800-year lag as correct (Watch the Al Gore clip above -- it is clear he knows. You can tell by the very careful way he describes the relationship). Luboš Motl summarizes in his blog:

However, the most popular - and the most straightforward - explanation of the direction of the causal relationship is the fact that in all cases, the CO2 concentration only changed its trend roughly 800 years after temperature had done the same thing. There have been many papers that showed this fact and incidentally, no one seems to disagree with it....

The whole "group" at RealClimate.ORG [ed: one of the leading sites promoting the anthropogenic theory] has agreed that there was a lag. But they say that in the first 800 years when the influence of temperature on CO2 is manifest, it was indeed temperature that drove the gases. But in the remaining 4200 years of the trend, it was surely the other way around: CO2 escalated the warming, they say.

Frequent readers will know that I have criticized forward-looking climate models on many occasions for being too reliant on positive feedback processes. For example, in the most recent IPCC models, over 2/3 of future warming comes not from CO2 but from various positive feedback effects (section 8.6 of the 2007 report).

The folks at RealClimate.org are similarly positing a positive feedback mechanism in the past -- "something" causes initial warming, which drives CO2 to outgas from the oceans, which causes more warming, etc.

I am not sure I have ever done so, so let me take a minute to discuss positive feedbacks. This is something I know a fair amount about, since my specialization at school in mechanical engineering was in control theory and feedback processes. Negative feedback means that when you disturb an object or system in some way, forces tend to counteract this disturbance. Positive feedback means that the forces at work tend to reinforce or magnify a disturbance.

You can think of negative feedback as a ball sitting in the bottom of a bowl. Flick the ball in any direction, and the sides of the bowl, gravity, and friction will tend to bring the ball back to rest in the center of the bowl. Positive feedback is a ball balanced on the pointy tip of a mountain. Flick the ball, and it will start rolling faster and faster down the mountain, and end up a long way away from where it started with only a small initial flick.
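The bowl and the mountain can be put into a few lines of code. This is only a toy sketch, assuming the simplest possible linear system, dx/dt = gain * x, where x is the ball's displacement from its starting point. The function name `simulate`, the step size, and the gain values of -1 and +1 are all made up for illustration and do not come from any climate model.

```python
def simulate(gain, x0=1.0, dt=0.1, steps=50):
    """Euler-integrate dx/dt = gain * x and return the list of displacements."""
    xs = [x0]
    for _ in range(steps):
        # The "force" is proportional to the current displacement.
        # gain < 0 pushes back toward zero; gain > 0 pushes further away.
        xs.append(xs[-1] + gain * xs[-1] * dt)
    return xs


bowl = simulate(gain=-1.0)  # negative feedback: ball in the bowl
peak = simulate(gain=+1.0)  # positive feedback: ball on the mountain tip

print(f"negative feedback, final displacement: {bowl[-1]:.4f}")
print(f"positive feedback, final displacement: {peak[-1]:.1f}")
```

With the same initial flick, the negative-gain run decays toward zero while the positive-gain run keeps growing for as long as the loop is allowed to run.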

Almost every process you can think of in nature operates by negative feedback. Roll a ball, and eventually friction and wind resistance bring it to a stop (except, apparently, on the greens at Augusta). There is a good reason for this. Positive feedback breeds instability, and processes that operate by positive feedback are dangerous, and usually end up in extreme states. These processes tend to "run away." I can illustrate this with an example: Nuclear fission is a positive feedback process. A high energy neutron causes the fission reaction, which produces multiple high energy neutrons that can cause more fission. It is a runaway process, and it is dangerous and unstable. We should be happy there are not more positive feedback processes on our planet.

Since negative feedback processes are much more common, and since positive feedback processes almost never yield a stable system, scientists assume that processes they meet are negative feedback until proven otherwise. Except in climate, it seems, where everyone assumes positive feedback is common.

Back to the climate question. The anthropogenic guys are saying that when the earth heated, it caused CO2 to outgas from the oceans, which in turn caused more warming, which causes more outgassing, etc. But where does it stop? If this is really how things work, why isn't the Earth more like Venus? If you are going to posit such a runaway process, you have to also posit what stops it. So far, the only thing I can think of is that the process would stop when all the bands of light that are absorbable by CO2 are fully saturated.

But the feedback is worse than this. I won't go into it now, but as you can see from this post, or from section 8.6 of the 2007 IPCC report, the current climate models assume that warming from CO2 itself yields further positive feedback effects (e.g. more humidity) that further accelerate warming, acting as a multiplier as great as 3-times on CO2 effects alone.
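The "3-times multiplier" here and the "over 2/3 of future warming" figure cited earlier are two ways of stating the same arithmetic, which a short sketch makes explicit. It assumes the textbook linear feedback loop from control theory, in which a fraction f of the output is fed back to the input and the total response is the direct response times 1 / (1 - f). That is a simplification for illustration, not a description of how the IPCC models are actually built, and the function names are hypothetical.

```python
def feedback_multiplier(f):
    """Closed-loop multiplier 1 / (1 - f) for a loop-gain fraction f."""
    if f >= 1:
        # In this simple model a loop gain at or above 1 has no finite
        # multiplier: this is the runaway case.
        raise ValueError("loop gain >= 1: no finite multiplier (runaway)")
    return 1.0 / (1.0 - f)


def feedback_share(multiplier):
    """Fraction of the total response that comes from feedback, not the direct effect."""
    return 1.0 - 1.0 / multiplier


# A 3x multiplier means about two-thirds of the total comes from feedback,
# and a two-thirds loop gain gives back a multiplier of about 3.
print(round(feedback_share(3.0), 3))
print(round(feedback_multiplier(2 / 3), 3))
```

In this simplified picture the multiplier climbs steeply as f approaches 1, so the size of the assumed feedback fraction is the whole ballgame.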

So here is the RealClimate view of the world: Any small warming from some outside source (think Mr. Sun) is accelerated by outgassing CO2, which is in turn accelerated by these other effects in their climate models. In other words, global temperature is a ball sitting perched on the top of a mountain, and the smallest nudge causes it to accelerate away. This is the point at which, despite having only limited knowledge about the climate, I have to call bullshit! There is just no way our planet's climate could be as stable as it has been long-term and be built on such positive feedback loops. No way. Either these folks are over-estimating the positive feedback or ignoring negative feedbacks or both. (And yes, I know we have had ice ages and such, but against the backdrop of the range of temperatures the Earth theoretically could have in different situations, our climate variation has been small.)

Postscript: The other day I mentioned that it was funny a group studying solar output felt the need to put in a statement validating anthropogenic global warming despite the fact that nothing in their research said any such thing. Motl points to a similar thing in the ice core studies:

Well, the website tells us that the paper that reported the lag contained the following sentence:

... is still in full agreement with the idea that CO2 plays, through its greenhouse effect, a key role in amplifying the initial orbital forcing ...

Again, this statement was included despite the fact that their study pretty clearly refutes some key premises in anthropogenic global warming theory. It's become a phrase like "no animal was hurt in the filming of this movie" that you have to append to every climate study. Or, probably a better analogy, it is like Copernicus spending a few chapters assuring everyone he still believes in God and the Bible before he lays out his heliocentric view of the solar system.

Update: All this is not to say that there are not positive feedback loops in climate. Ice albedo is probably one -- as temperatures rise, ice melts and less sunlight is reflected back into space by the ice so the world warms more. My point is that it does not make any sense to say that positive feedback processes dominate.

Correction: Like a moron, I have been using anthropomorphic rather than anthropogenic to refer to man-made climate effects. Oops. Thanks to my reader who corrected me. I have fixed this article but am too lazy to go back and edit the past.

Further Update: The irony of my correction above juxtaposed against the title of the previous post is not lost on me.

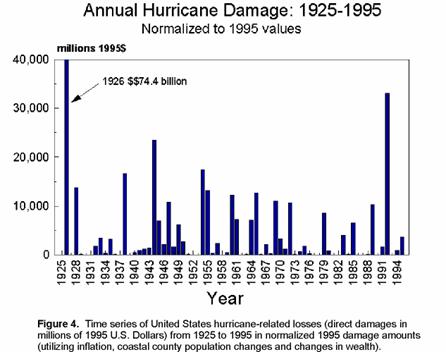

Update to the Postscript: Oh my god, here it is again. An NOAA-funded study comes to the conclusion that global warming might actually reduce hurricane strength and frequency. Nowhere in the study did the researchers touch any topic related to anthropogenic warming -- they just studied what might happen to hurricanes if the world warms for any reason. But here is that disclaimer again:

"This study does not, in any way, undermine the widespread consensus in the scientific community about the reality of global warming," said co-author Brian Soden, Rosenstiel School associate professor of meteorology and physical oceanography whose research is partly funded by NOAA.

Do NOAA and other funding bodies actually require that this boilerplate be added to every study?