Russ Roberts over at Cafe Hayek quotes from a Cathy O’Neil review of Nate Silver’s recent book:

Silver chooses to focus on individuals working in a tight competition and their motives and individual biases, which he understands and explains well. For him, modeling is a man versus wild type thing, working with your wits in a finite universe to win the chess game.

He spends very little time on the question of how people act inside larger systems, where a given modeler might be more interested in keeping their job or getting a big bonus than in making their model as accurate as possible.

In other words, Silver crafts an argument which ignores politics. This is Silver’s blind spot: in the real world politics often trump accuracy, and accurate mathematical models don’t matter as much as he hopes they would....

My conclusion: Nate Silver is a man who deeply believes in experts, even when the evidence is not good that they have aligned incentives with the public.

Distrust the experts

Call me “asinine,” but I have less faith in the experts than Nate Silver: I don’t want to trust the very people who got us into this mess, while benefitting from it, to also be in charge of cleaning it up. And, being part of the Occupy movement, I obviously think that this is the time for mass movements.

Like Ms. O'Neil, I distrust "authorities," and I have a real problem with debates that quickly fall into dueling appeals to authority. She is focusing here on overt politics, but subtler pressure and signalling are important as well. For example, since "believing" in climate alarmism is equated in many circles with a sort of positive morality (and being skeptical of such findings is equated with being a bad person), there is an underlying peer pressure that is different from overt politics but just as damaging to scientific rigor. Here is an example from the comments at Judith Curry's blog discussing research on climate sensitivity (the temperature response predicted if atmospheric levels of CO2 double):

While many estimates have been made, the consensus value often used is ~3°C. Like the porridge in “The Three Bears”, this value is just right – not so great as to lack credibility, and not so small as to seem benign.

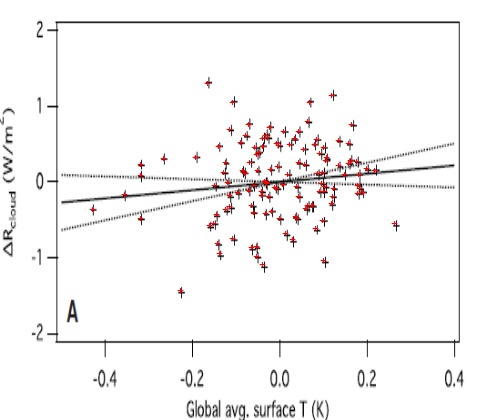

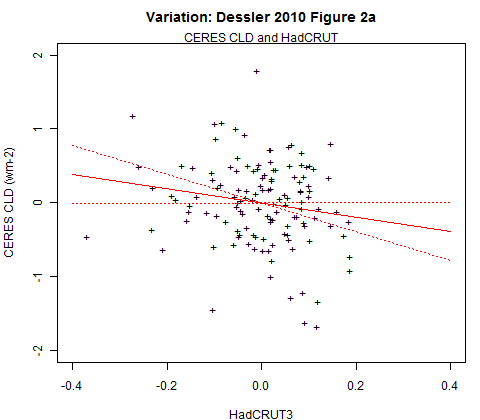

Huybers (2010) showed that the treatment of clouds was the “principal source of uncertainty in models”. Indeed, his Table I shows that whereas the response of the climate system to clouds by various models varied from 0.04 to 0.37 (a wide spread), the variation of net feedback from clouds varied only from 0.49 to 0.73 (a much narrower relative range). He then examined several possible sources of compensation between climate sensitivity and radiative forcing. He concluded:

“Model conditioning need not be restricted to calibration of parameters against observations, but could also include more nebulous adjustment of parameters, for example, to fit expectations, maintain accepted conventions, or increase accord with other model results. These more nebulous adjustments are referred to as ‘tuning’.” He suggested that one example of possible tuning is that “reported values of climate sensitivity are anchored near the 3±1.5°C range initially suggested by the ad hoc study group on carbon dioxide and climate (1979) and that these were not changed because of a lack of compelling reason to do so”.

Huybers (2010) went on to say:

“More recently reported values of climate sensitivity have not deviated substantially. The implication is that the reported values of climate sensitivity are, in a sense, tuned to maintain accepted convention.”

Translated into simple terms, the implication is that climate modelers have been heavily influenced by the early (1979) estimate that doubling of CO2 from pre-industrial levels would raise global temperatures 3±1.5°C. Modelers have chosen to compensate their widely varying estimates of climate sensitivity by adopting cloud feedback values countering the effect of climate sensitivity, thus keeping the final estimate of temperature rise due to doubling within limits preset in their minds.

There is a LOT of bad behavior out there by modelers. I know that to be true because I used to be a modeler myself. What laymen do not understand is that it is way too easy to tune and tweak and plug models to get a preconceived answer, and the more complex the model, the easier this is to do in a non-transparent way. Here is one example, related again to climate sensitivity:

When I looked at historic temperature and CO2 levels, it was impossible for me to see how they could be in any way consistent with the high climate sensitivities that were coming out of the IPCC models. Even if all past warming were attributed to CO2 (a heroic assertion in and of itself) the temperature increases we have seen in the past imply a climate sensitivity closer to 1 rather than 3 or 5 or even 10 (I show this analysis in more depth in this video).
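The back-of-envelope arithmetic behind that claim can be sketched roughly as follows. This is a minimal illustration, not the analysis from the video: it assumes warming scales with the logarithm of CO2 concentration, attributes all of the observed warming to CO2, and ignores any lag in the temperature response. The function name and the input numbers are placeholders chosen for illustration, not measurements.

```python
import math


def implied_sensitivity(delta_t, co2_start_ppm, co2_end_ppm):
    """Warming per CO2 doubling implied by a past temperature change.

    Assumes (for illustration only) that all of delta_t is caused by CO2
    and that temperature responds to the base-2 log of concentration.
    """
    doublings = math.log2(co2_end_ppm / co2_start_ppm)
    return delta_t / doublings


# Placeholder inputs, NOT measured data: a past warming in degrees C
# and start/end CO2 concentrations in ppm.
example = implied_sensitivity(delta_t=0.7, co2_start_ppm=280.0, co2_end_ppm=390.0)
print(f"implied sensitivity: {example:.2f} C per doubling")
```

Swapping in different values for the warming or the concentrations shows how strongly the implied sensitivity depends on what share of past warming is attributed to CO2.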

My skepticism was increased when several skeptics pointed out a problem that should have been obvious. The ten or twelve IPCC climate models all had very different climate sensitivities — how, if they have different climate sensitivities, do they all nearly exactly model past temperatures? If each embodies a correct model of the climate, and each has a different climate sensitivity, only one (at most) should replicate observed data. But they all do. It is like someone saying she has ten clocks all showing a different time but asserting that all are correct (or worse, as the IPCC does, claiming that the average must be the right time).

The answer to this paradox came in a 2007 study by climate modeler Jeffrey Kiehl. To understand his findings, we need to understand a bit of background on aerosols. Aerosols are man-made pollutants, mainly combustion products, that are thought to have the effect of cooling the Earth’s climate.

What Kiehl demonstrated was that these aerosols are likely the answer to my old question about how models with high sensitivities are able to accurately model historic temperatures. When simulating history, scientists add aerosols to their high-sensitivity models in sufficient quantities to cool them to match historic temperatures. Then, since such aerosols are much easier to eliminate as combustion products than is CO2, they assume these aerosols go away in the future, allowing their models to produce enormous amounts of future warming.

Specifically, when he looked at the climate models used by the IPCC, Kiehl found they all used very different assumptions for aerosol cooling and, most significantly, he found that each of these varying assumptions was exactly what was required to combine with that model’s unique sensitivity assumptions to reproduce historical temperatures. In my terminology, aerosol cooling was the plug variable.
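To make the "plug variable" idea concrete, here is a toy sketch. It is not Kiehl's method or any real climate model: it assumes a simple linear relation in which warming equals a response factor times total forcing, with the response factor set by the model's sensitivity. The constant `F_2X`, the function `aerosol_plug`, and all input numbers are assumptions for illustration.

```python
# Forcing from one doubling of CO2 in W/m^2. A commonly cited approximate
# value, used here as an assumption.
F_2X = 3.7


def aerosol_plug(sensitivity, observed_warming, ghg_forcing):
    """Aerosol forcing (W/m^2, negative means cooling) needed for a model
    with the given sensitivity (deg C per doubling) to reproduce
    observed_warming, in a toy linear model: warming = response * forcing.
    """
    response = sensitivity / F_2X          # deg C per W/m^2
    total_forcing_needed = observed_warming / response
    return total_forcing_needed - ghg_forcing


# Placeholder inputs, NOT measured data.
for s in (1.5, 3.0, 4.5):
    plug = aerosol_plug(s, observed_warming=0.7, ghg_forcing=2.5)
    print(f"sensitivity {s}: aerosol forcing needed = {plug:.2f} W/m^2")
```

In this toy setup every sensitivity reproduces the same history, because the aerosol term absorbs the difference: the higher the sensitivity, the more aerosol cooling has to be plugged in.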

By the way, this aerosol issue is central to recent work that is pointing to a much lower climate sensitivity to CO2 than has been reported in past IPCC reports.