Kevin Drum thinks he has a killer analysis supporting government health care. In a post he titles sarcastically "Best Healthcare In the World, Baby," Drum shares this chart:

The implication is that the US has the worst healthcare system, because, according to this study, the US has the highest rates of "amenable mortality," defined as deaths that are "potentially preventable with timely and effective health care."

I get caught from time to time linking to studies that turn out to have crappy methodology. However, I do try to do a little due diligence each time to at least look at their approach, particularly when the authors are claiming to measure something so non-objective as mortality that was "potentially preventable."

So, when in doubt, let's look at what the authors have to say about their methodology. The press release is here, which gets us nowhere. From there, though, one can link to here and then download the article from Health Affairs as a PDF (the site is gated, but I found that if you go through the press release site you can get in for free).

The wording of the study and the chart as quoted by Mr. Drum seem to imply that someone has gone through a sampling of medical histories to look at deaths to decide if they were preventable deaths. Some studies like this have been conducted. This is not one of them. The authors do not look at any patient data.

Here is what they actually did: They arbitrarily defined a handful of conditions as "amenable" to care. These are:

Ischemic Heart Disease (IHD)

Other circulatory diseases

Neoplasms (some cancers)

Diabetes

Respiratory diseases

Surgical conditions and medical errors

Infectious diseases

Perinatal, congenital, and maternal conditions

Other (very small)

All the study does is show how many people died in each country from this set of diseases and conditions. Period. It doesn't determine whether they got care or whether they in particular could have been saved, just that they died of one of the conditions on the list above. This study was not an effort to identify people who died when their particular condition should have been preventable or amenable to care; all it measures is the number of people in each country who died from this list of conditions. If Joe is talking to me and in the next second flops over instantly dead of a massive heart attack, the authors consider him to have died of a disease amenable to care.

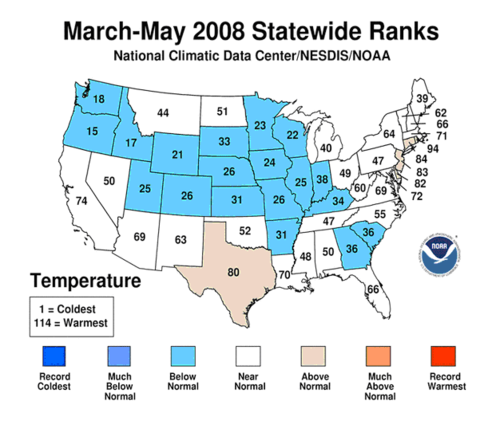

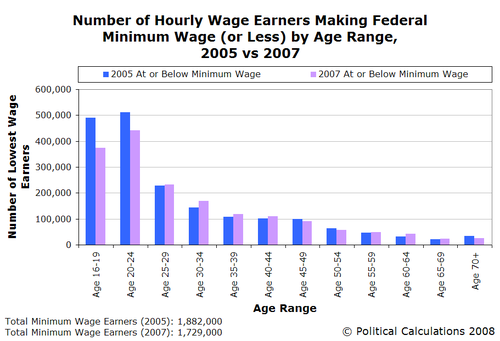

We can learn something by looking at the breakdown of the data. If you can't read the table below, click on it for a larger version.

Let's take the data for men. The study makes a big point of saying that France is much better than the US, so we will use those two countries. In 2003, France had an "amenable disease" death rate 56 points lower than the US. But we can see that most of this gap, 42 points of it, comes from heart and circulatory diseases. The incidence of these diseases is highly related to diet and lifestyle. In fact, it is well established that the US has a comparatively high incidence rate of these diseases, much higher than France. This makes it quite possible that the mortality difference is due entirely to lifestyle differences and disease incidence rates rather than the relative merits of the health care systems. In fact, this study is close to meaningless. If the authors really wanted to make a point about the quality of health care systems, they would compare them on relative mortality with a denominator of the disease incidence rate, not a denominator of total population.
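To make the denominator point concrete, here is a minimal sketch. The two countries and every number in it are made up for illustration; none of it comes from the study. Both hypothetical countries treat the disease equally well (the same share of patients die), but one has a much higher incidence rate.

```python
# Hypothetical illustration only: countries "A" and "B" and all figures
# below are invented, not taken from the Health Affairs study.

def deaths_per_100k_population(incidence_per_100k, case_fatality):
    """Deaths per 100,000 people: how many get the disease times the share who die."""
    return incidence_per_100k * case_fatality

def deaths_per_100_cases(deaths_per_100k, incidence_per_100k):
    """Deaths per 100 people who actually have the disease."""
    return 100.0 * deaths_per_100k / incidence_per_100k

countries = {
    # Same quality of care (20% of patients die), different incidence.
    "A": {"incidence": 500.0, "case_fatality": 0.20},
    "B": {"incidence": 200.0, "case_fatality": 0.20},
}

results = {}
for name, c in countries.items():
    per_population = deaths_per_100k_population(c["incidence"], c["case_fatality"])
    per_case = deaths_per_100_cases(per_population, c["incidence"])
    results[name] = (per_population, per_case)
    print(f"{name}: {per_population:.1f} deaths per 100k people, "
          f"{per_case:.1f} deaths per 100 cases")
```

With a total-population denominator, country A looks two and a half times worse than B. With an incidence denominator, the two are identical, which is the comparison that would actually say something about the care delivered.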

But in their discussion, the study's authors reveal themselves to be, if I am reading them right, complete idiots in terms of statistical methods. The authors acknowledge that lifestyle differences may be a problem in their data. This is how they say they solved this problem:

It is important to recognize that the development of any list of indicators of amenable mortality involves a degree of judgment, as a death from any cause is typically the final event in a complex chain of processes that include issues related to underlying social and economic factors, lifestyles, and preventive and curative health care. As a consequence, interpretation of findings requires an understanding of the natural history and scope for prevention and treatment of the condition in question. Thus, in the case of IHD, we find accumulating evidence that suggests that advances in health care have contributed to declining mortality from this condition in many countries, yet it is equally clear that large international differences in mortality predated the advent of effective health care, reflecting factors such as diet and rates of smoking and physical activity.16 To account for this variation, we included only half of the mortality from IHD, although, based on the available evidence, figures between, say, 25 percent and 70 percent would be equally justifiable.

I have a very smart reader group, so my sense is that many of you already see the gaffe here. The authors posit that 50% of heart disease deaths may be due to lifestyle, though the number might be higher or lower. So to correct for this, they reduce every country's heart disease number (IHD) by a fixed 50%. WTF?? This corrects for NOTHING. All this does is reduce the weighting of IHD in the total measure.

Look, if the problem is that the lifestyle contribution to heart disease varies by country, then the percentage of IHD deaths that needs to be removed because the deaths are lifestyle related will vary by country. If the US has the "worst" lifestyle, and the share of lifestyle deaths is about 50% there, it is going to be less than 50% in every other country. The correction, if an accurate one could be created, needs to be applied to the variance between nations, not to the base numbers. Careful multiple regressions might or might not have sorted the two sets of causes apart, but cutting every number by 50% doesn't do anything. This mistake is not just wrong, it is LAUGHABLE, and it calls into question the authors' qualifications to say anything on this topic. They may be fine doctors, but they don't know squat about data analysis.
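Here is a rough sketch of why the halving corrects for nothing. The raw IHD figures are the approximate male numbers before halving (about 100 for the US and about 40 for France). The country-specific lifestyle shares are purely my assumption for illustration; the study provides no such figures.

```python
# Approximate male IHD deaths per 100,000 before the study's halving.
ihd_raw = {"US": 100.0, "France": 40.0}

def apply_uniform_discount(rates, keep_fraction=0.5):
    """The study's approach: keep the same fraction of IHD deaths everywhere."""
    return {country: rate * keep_fraction for country, rate in rates.items()}

def apply_country_discount(rates, lifestyle_share):
    """A real correction would remove a different lifestyle share per country."""
    return {country: rate * (1.0 - lifestyle_share[country])
            for country, rate in rates.items()}

halved = apply_uniform_discount(ihd_raw)
ratio_before = ihd_raw["US"] / ihd_raw["France"]
ratio_after = halved["US"] / halved["France"]
print(f"US/France ratio before halving: {ratio_before:.2f}")
print(f"US/France ratio after halving:  {ratio_after:.2f}")

# ASSUMPTION: hypothetical lifestyle shares, invented for this example.
# The US gets 50% (the "worst" lifestyle); France gets something lower.
lifestyle_share = {"US": 0.50, "France": 0.25}
adjusted = apply_country_discount(ihd_raw, lifestyle_share)
ratio_adjusted = adjusted["US"] / adjusted["France"]
print(f"US/France ratio with country-specific shares: {ratio_adjusted:.2f}")
```

The uniform halving leaves the US/France ratio untouched; it only shrinks how much IHD counts in the total index. Only a discount that differs by country changes the relative standing, and how much it changes depends entirely on shares nobody has measured here.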

There may be nuggets of concern for the US lurking in this data. I don't know how they measure deaths from surgical conditions and medical errors, but it's not good to be higher on this. Though again, you have to be careful. The US has far more surgeries per capita than most other countries, so we have more surgical deaths. Also, medical error data is notoriously difficult to compare country to country because reporting standards and processes are so different. In the US, when the government measures medical errors, it is a neutral third party to the error. In Europe, the government, as healthcare provider, is often the source of the error, calling into question how aggressive these countries may be in defining "an error."

Infant mortality data is a good example of such a trap. The US often looks worse than European nations on infant mortality because it is defined as infant deaths as a percentage of live births. But the US has the most advanced neonatal capabilities in the world. Many pregnancies that would result in a "born dead" in other countries result in a live birth in the US. Since these rescued births are much more problematic, their death rate is much higher.
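The infant mortality trap is easy to see with a toy example. Everything below is hypothetical: two invented countries with identical low-risk births and the same number of high-risk pregnancies, differing only in how many of the high-risk cases end up recorded as live births.

```python
# Hypothetical numbers only, invented to illustrate the denominator effect.

def infant_mortality_per_1000(live_births, infant_deaths):
    """Standard definition: infant deaths per 1,000 live births."""
    return 1000.0 * infant_deaths / live_births

# Both countries: 9,900 low-risk live births with 30 infant deaths,
# plus 100 high-risk pregnancies.
low_risk_births, low_risk_deaths = 9900, 30

# Country X: 80 of the high-risk cases are recorded as live births; 40 later die.
x_high_risk_births, x_high_risk_deaths = 80, 40
# Country Y: only 20 are recorded as live births; 10 later die.
y_high_risk_births, y_high_risk_deaths = 20, 10

rate_x = infant_mortality_per_1000(low_risk_births + x_high_risk_births,
                                   low_risk_deaths + x_high_risk_deaths)
rate_y = infant_mortality_per_1000(low_risk_births + y_high_risk_births,
                                   low_risk_deaths + y_high_risk_deaths)

# High-risk babies who actually survive in each country.
survivors_x = x_high_risk_births - x_high_risk_deaths
survivors_y = y_high_risk_births - y_high_risk_deaths

print(f"Country X: {rate_x:.2f} deaths per 1,000 live births, "
      f"{survivors_x} high-risk survivors")
print(f"Country Y: {rate_y:.2f} deaths per 1,000 live births, "
      f"{survivors_y} high-risk survivors")
```

In this made-up case, country X saves four times as many high-risk babies yet reports the higher infant mortality rate, because the rescued births enter both the numerator and the denominator.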

There is good news for the US in the study. The item on this list most amenable to intensive medical intervention is cancer (neoplasms in the study above). In that category, despite a higher incidence rate than many of these countries, the US has one of the lowest mortality rates as a percentage of the total population, which implies that US cancer mortality as a percentage of cancer incidence is much better than in these countries. This is consistent with our much higher 5-year cancer survival rates.

Update: I thought this was pretty clear, but some of the commenters are confused. The halving of IHD numbers was applied to all countries, not just the US. So the actual male US IHD number is about 100 before halving and the actual French number is about 40. Again, this halving only reduces the weighting of IHD in the total index; it in no way corrects for differences in incidence rate.

Wall Street Journal
