Some Final Thoughts on The NASA Temperature Restatement

I got a lot of traffic this weekend from folks interested in the US historical temperature restatement at NASA-GISS. I wanted to share a few final thoughts and also respond to a post at RealClimate.org (the #1 web cheerleader for catastrophic man-made global warming theory).

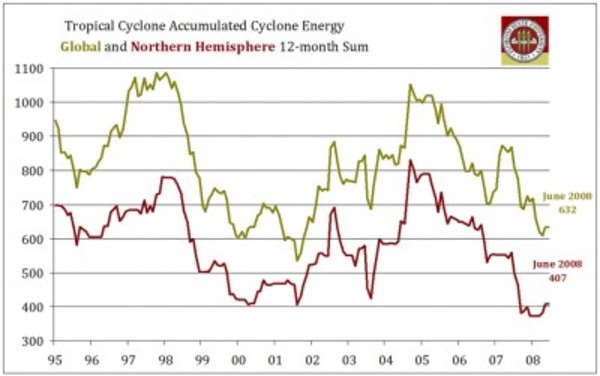

- This restatement does not mean that the folks at GISS are necessarily wrong when they say the world has been warming over the last 20 years. We know from the independent source of satellite measurements that the Northern Hemisphere has been warming (though not so much the Southern Hemisphere). However, surface temperature measurements, particularly as "corrected" and aggregated at the GISS, have always been much higher than the satellite readings. (GISS vs Satellite) This incident may start to give us some insight into how to bring those two sources into agreement.

- For years, Hansen's group at GISS, as well as other leading climate scientists such as Mann and Briffa (creators of historical temperature reconstructions), have flouted the rules of science by keeping the details of their methodologies and algorithms secret, making full scrutiny impossible. The best possible outcome of this incident would be new pressure on these scientists to stop saying "trust me" and open their work to their peers for review. This is particularly important for activities such as Hansen's temperature database at GISS. While measuring temperature would seem straightforward, in actual fact the signal-to-noise ratio is really low. Upward "adjustments" and fudge factors added by Hansen to the actual readings dwarf measured temperature increases, such that, for example, most reported warming in the US actually comes from these adjustments, not from measured increases.

- In a week when Newsweek chose to argue that climate skeptics need to shut up, this incident actually proves why two sides are needed for a quality scientific debate. Hansen and his team missed this Y2K bug because, as a man-made global warming cheerleader, Hansen expected to see temperatures going up rapidly, so he did not think to question the data. Mr. Hansen is world-famous, is a friend of luminaries like Al Gore, and gets grants in quarter-million-dollar chunks from various global warming believers. His entire outlook and all his incentives made him want the higher temperatures to be true. It took other people with different hypotheses about climate to see the recent temperature jump for what it was: an error.

The general response at RealClimate.org has been: Nothing to see here, move along.

Among other incorrect stories going around are that the mistake was due to a Y2K bug or that this had something to do with photographing weather stations. Again, simply false.

I really, really don't think it matters exactly how the bug was found, except to the extent that RealClimate.org would like to rewrite history and convince everyone this was just a normal adjustment made by the GISS themselves rather than a mistake found by an outsider. However, just for the record, the GISS, for now at least, until they clean up history a bit, admits the bug was spotted by Steven McIntyre. Whatever the bug turned out to be, McIntyre initially spotted it as a discontinuity that seemed to exist in GISS data around the year 2000. He therefore hypothesized it was a Y2K bug, but he didn't know for sure because Hansen and the GISS keep all their code as a state secret. And McIntyre himself says he became aware of the discontinuity during a series of posts that started from a picture of a weather station at Anthony Watts's blog. I know because I was part of the discussion, talking to these folks online in real time. Here is McIntyre explaining it himself.

In sum, the post on RealClimate says:

Sum total of this change? A couple of hundredths of degrees in the US rankings and no change in anything that could be considered climatically important (specifically long term trends).

A bit of background: surface temperature readings have run higher than satellite readings of the troposphere, when the science of greenhouse gases says the opposite should be true. Global warming hawks like Hansen and the GISS have pounded on the satellite numbers, investigating them eight ways to Sunday, and have on a number of occasions trumpeted upward corrections to the satellite numbers that are far smaller than these downward corrections to the surface numbers.

But yes, IF this is the only mistake in the data, then this is a mostly correct statement from RealClimate.org. However, here is my perspective:

- If a mistake of this magnitude can be found by outsiders without access to Hansen's algorithms or computer code, just by inspecting the resulting data, then what would we find if we could actually inspect the code? And this Y2K bug is by no means the only problem. I have pointed out several myself, including adjustments for urbanization and station siting that make no sense, and the practice of averaging in bad measurement locations rather than dropping them.

- If we know significant problems exist in the US temperature monitoring network, what would we find looking at China? Or Africa? Or South America? In the US and a few parts of Europe, we actually have a few temperature measurement points that were rural in 1900 and are rural today. But not one station was measuring rural temperatures on these other continents 100 years ago. All we have are temperature measurements in urban locations, where we can only guess at how to adjust for urbanization. The problem in these locations, and the reason I say this is a low signal-to-noise measurement, is that small percentage changes in our guess for the size of the urbanization correction make enormous changes in historic temperature change measurements, even to the point of changing their sign.
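To make the sign-flip point concrete, here is a toy calculation with made-up numbers (they are not real station data): suppose an urban station shows a raw warming trend of 0.5°C per century, and our guess for the urbanization correction ranges from 0.4 to 0.6°C per century. A minimal Python sketch, assuming the correction is simply subtracted from the raw trend (the function name `adjusted_trend` is hypothetical):

```python
# Toy illustration only: all numbers are made up, not real station data.
# Assumption: adjusted trend = raw trend - urbanization correction,
# with both expressed in degrees C per century.


def adjusted_trend(raw_trend: float, urban_correction: float) -> float:
    """Return the trend left over after subtracting a guessed urbanization correction."""
    return raw_trend - urban_correction


raw = 0.5  # hypothetical raw warming trend at an urban station, deg C/century

# Vary the guessed correction by +/-20% around 0.5 and watch the result.
for correction in (0.4, 0.5, 0.6):
    result = adjusted_trend(raw, correction)
    print(f"correction={correction:.1f} -> adjusted trend={result:+.1f} C/century")
```

A 20% change either way in the guessed correction moves the adjusted trend from +0.1 to -0.1°C per century, which is exactly what a low signal-to-noise measurement looks like.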

Here are my recommendations:

1. NOAA and GISS both need to release their detailed algorithms and computer software code for adjusting and aggregating USHCN and global temperature data. Period. There can be no argument. Folks at RealClimate.org who believe that all is well should be begging for this to happen to shut up the skeptics. The only possible reason for not releasing this scientific information that was created by government employees with taxpayer money is if there is something to hide.

2. NOAA and GISS need to acknowledge that they have assumed station quality in the USHCN network to be higher than it actually is, and that they need to incorporate actual documented station condition (as done at SurfaceStations.org) in their temperature aggregations and corrections. In some cases, stations like Tucson need to just be thrown out of the USHCN. Once the US is done, a similar effort needs to be undertaken on a global scale, and the effort needs to include people whose incentives and outlook are not driven by making temperatures read as high as possible.

3. This is the easiest of all. Someone needs to do empirical work (not simulated, not on the computer, but with real instruments) to understand how various temperature station placements affect measurements. For example, how do the readings of an instrument in an open rural field compare to those of an identical instrument surrounded by asphalt a few miles away? These results can be used for step #2 above. This is cheap, simple research a couple of graduate students could do, but climatologists all seem focused on building computer models rather than actually doing science.

4. Similar to #3, someone needs to do a definitive urban heat island study, to find out how much temperature readings are affected by urban heat, again to help with the corrections in #2. Again, I want real research here, with identical instruments placed in various locations and at various radii from an urban center (not goofy proxies like temperature vs. wind speed, which is what you get from a scientist who wants a result without ever leaving his computer terminal). Most studies have shown the number to be large, but a couple of recent studies show smaller effects, though these studies are now under attack not just for sloppiness but for outright fabrication. This can't be that hard to study, if people were willing to actually go into the field and take measurements. The problem is that everyone is trying to do this study with available data rather than by gathering new data.
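Here's how simple the analysis side of steps #3 and #4 could be once the instruments are in the field. A minimal Python sketch, assuming identical instruments read at the same times at two locations; the function name `paired_difference` and the readings are hypothetical, invented for illustration, not real measurements:

```python
from math import sqrt
from statistics import mean, stdev


def paired_difference(urban, rural):
    """Return (mean, standard error) of the reading-by-reading differences urban - rural.

    Assumes both lists come from identical instruments read at the same times,
    so each pair differs only by the station's surroundings.
    """
    if len(urban) != len(rural) or len(urban) < 2:
        raise ValueError("need two equal-length series with at least 2 readings")
    diffs = [u - r for u, r in zip(urban, rural)]
    return mean(diffs), stdev(diffs) / sqrt(len(diffs))


# Hypothetical daily minimum readings (deg C), invented for illustration only.
asphalt_site = [18.2, 19.0, 17.5, 20.1, 18.8]
field_site = [16.9, 17.6, 16.4, 18.7, 17.3]

bias, se = paired_difference(asphalt_site, field_site)
print(f"asphalt minus field: {bias:.2f} C (standard error {se:.2f} C)")
```

The standard error matters as much as the average: if the paired difference is small relative to it, the siting effect can't be distinguished from instrument noise without collecting more days of readings.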

Postscript: The RealClimate post says:

However, there is clearly a latent and deeply felt wish in some sectors for the whole problem of global warming to be reduced to a statistical quirk or a mistake.

If catastrophic man-made global warming theory is correct, then man faces a tremendous lose-lose. Either shut down growth, send us back to the 19th century, making us all substantially poorer and locking a billion people in Asia into poverty they are on the verge of escaping, or face catastrophic and devastating changes in the planet's weather.

Now take two people. One in his heart really wants this theory not to be true, and hopes we don't have to face this horrible lose-lose tradeoff. The other has a deeply felt wish that this theory is true, and hopes man does face this horrible future. Which person do you like better? And recognize, RealClimate is holding up the latter as the only moral man.

Update: Don't miss Steven McIntyre's take on the whole thing. And McIntyre responds to Hansen here.