Knowledge and Certainty "Laundering" Via Computer Models

Today I want to come back to a topic I have not covered for a while, which is what I call knowledge or certainty "laundering" via computer models. I will explain this term more in a moment, but I use it to describe the use of computer models (by scientists and economists but with strong media/government/activist collusion) to magically convert an imperfect understanding of a complex process into apparently certain results and predictions with two-decimal-place precision.

The initial impetus to revisit this topic was reading "Chameleons: The Misuse of Theoretical Models in Finance and Economics" by Paul Pfleiderer of Stanford University (which I found referenced in a paper by Anat R. Admati on dangers in the banking system). I will excerpt this paper in a moment, and though he is talking more generically about theoretical models (whether embodied in code or not), I think a lot of his paper is relevant to this topic.

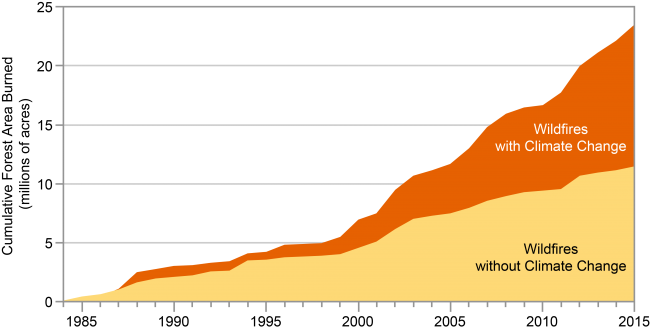

Before we dig into it, let's look at the other impetus for this post, which was my seeing this chart in the "Southwest" section of the recent Fourth National Climate Assessment.

The labelling of the chart understates the heroic feat the authors achieved, as their conclusion actually models wildfire with and without anthropogenic climate change. This means that first they had to model the counterfactual of what the climate would have been like without the 30ppm (0.003% of the atmosphere) of CO2 added in the period. Then, they had to model the counterfactual of what the wildfire burn acreage would have been under that counterfactual climate vs. what actually occurred. All while teasing out the effects of climate change from other variables like forest management and fuel reduction policy (which -- oddly enough -- despite substantial changes in this period apparently goes entirely unmentioned in the underlying study and does not seem to be a variable in their model). And they do all this for every year back to the mid-1980s.

Don't get me wrong -- this is a perfectly reasonable analysis to attempt, even if I believe they did it poorly and am skeptical you can get good results in any case (and even given the obvious fact that the conclusions are absolutely not testable in any way). But any critique I might have is a normal part of the scientific process. I critique, then if folks think it is valid they redo the analysis fixing the critique, and the findings might hold or be changed. The problem comes further down the food chain:

- When the media, and in this case the US government, use this analysis completely uncritically and without any error bars to pretend at a certainty -- in this case that half of the recent wildfire damage is due to climate change -- that simply does not exist.

- And when anything that supports the general theory that man-made climate change is catastrophic immediately becomes -- without challenge or further analysis -- part of the "consensus" and therefore immune from criticism.

I like to compare climate models to economic models, because economics is the one other major field of study where I think the underlying system is nearly as complex as the climate. Readers know I accept that man is causing some warming via CO2 -- I am a lukewarmer who has proposed a carbon tax. However, as an engineer whose undergraduate work focused on the dynamics of complex systems, I go nuts with anti-scientific statements like "CO2 is the control knob for the Earth's climate." It is simply absurd to say that an entire complex system like climate is controlled by a single variable, particularly one that is 0.04% of the atmosphere. If a sugar farmer looking for a higher tariff told you that sugar production was the single control knob for the US economy, you would call BS on them in a second (sugar being just 0.015% by dollars of a tremendously complex economy).

But in fact, economists play at these same sorts of counterfactuals. I wrote about economic analysis of the effects of the stimulus way back in 2010. It is very similar to the wildfire analysis above in that it posits a counter-factual and then asserts the difference between the modeled counterfactual and reality is due to one variable.

Last week the Council of Economic Advisers (CEA) released its congressionally commissioned study on the effects of the 2009 stimulus. The panel concluded that the stimulus had created as many as 3.6 million jobs, an odd result given the economy as a whole actually lost something like 1.5 million jobs in the same period. To reach its conclusions, the panel ran a series of complex macroeconomic models to estimate economic growth assuming the stimulus had not been passed. Their results showed employment falling by over 5 million jobs in this hypothetical scenario, an eyebrow-raising result that is impossible to verify with actual observations.

Most of us are familiar with using computer models to predict the future, but this use of complex models to write history is relatively new. Researchers have begun to use computer models for this sort of retrospective analysis because they struggle to isolate the effect of a single variable (like stimulus spending) in their observational data. Unless we are willing to, say, give stimulus to South Dakota but not North Dakota, controlled experiments are difficult in the macro-economic realm.

But the efficacy of conducting experiments within computer models, rather than with real-world observation, is open to debate. After all, anyone can mine data and tweak coefficients to create a model that accurately depicts history. One is reminded of algorithms based on skirt lengths that correlated with stock market performance, or on Washington Redskins victories that predicted past presidential election results.

But the real test of such models is to accurately predict future events, and the same complex economic models that are being used to demonstrate the supposed potency of the stimulus program perform miserably on this critical test. We only have to remember that the Obama administration originally used these same models barely a year ago to predict that unemployment would remain under 8% with the stimulus, when in reality it peaked over 10%. As it turns out, the experts' hugely imperfect understanding of our complex economy is not improved merely by coding it into a computer model. Garbage in, garbage out.
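The data-mining point in the excerpt above is easy to demonstrate. The following is a minimal sketch, not anyone's actual economic model: it generates a purely random "history," then searches hundreds of equally random candidate series (stand-ins for skirt lengths or football results) for the one that best matches that history. All names, sample sizes, and the seed are assumptions chosen for illustration.

```python
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def mine_best_predictor(n_candidates=500, n_history=20, n_future=200, seed=0):
    """Pick the random series that best 'explains' a random history.

    Returns (correlation over the history used for selection,
             correlation over the later periods the search never saw).
    Every series here is pure noise, so any fit is spurious by construction.
    """
    rng = random.Random(seed)
    total = n_history + n_future
    target = [rng.gauss(0, 1) for _ in range(total)]

    best = None
    for _ in range(n_candidates):
        candidate = [rng.gauss(0, 1) for _ in range(total)]
        r_history = pearson(candidate[:n_history], target[:n_history])
        if best is None or abs(r_history) > abs(best[0]):
            r_future = pearson(candidate[n_history:], target[n_history:])
            best = (r_history, r_future)
    return best


if __name__ == "__main__":
    r_history, r_future = mine_best_predictor()
    print(f"fit to history:        r = {r_history:+.2f}")
    print(f"fit to unseen periods: r = {r_future:+.2f}")
```

The winning series looks like a respectable predictor over the historical window only because it was selected for that. Over the periods it was not selected on, the relationship largely evaporates, which is why matching history alone says little about a model.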

Thus we get to the concept I call knowledge laundering or certainty laundering. I described what I mean by this back in the blogging dinosaur days (note this is from 2007 so my thoughts on climate have likely evolved since then).

Remember what I said earlier: The models produce the result that there will be a lot of anthropogenic global warming in the future because they are programmed to reach this result. In the media, the models are used as a sort of scientific money laundering scheme. In money laundering, cash from illegal origins (such as smuggling narcotics) is fed into a business that then repays the money back to the criminal as a salary or consulting fee or some other type of seemingly legitimate transaction. The money he gets back is exactly the same money, but instead of just appearing out of nowhere, it now has a paper-trail and appears more legitimate. The money has been laundered.

In the same way, assumptions of dubious quality or certainty that presuppose AGW beyond the bounds of anything we have seen historically are plugged into the models, and, shazam, the models say that there will be a lot of anthropogenic global warming. These dubious assumptions, which are pulled out of thin air, are laundered by being passed through these complex black boxes we call climate models and suddenly the results are somehow scientific proof of AGW. The quality hasn't changed, but the paper trail looks better, at least in the press. The assumptions begin as guesses of dubious quality and come out laundered as "settled science."

Back in 2011, I highlighted a climate study that virtually admitted to this laundering via model by saying:

These questions cannot be answered using observations alone, as the available time series are too short and the data not accurate enough. We therefore used climate model output generated in the ESSENCE project, a collaboration of KNMI and Utrecht University that generated 17 simulations of the climate with the ECHAM5/MPI-OM model to sample the natural variability of the climate system. When compared to the available observations, the model describes the ocean temperature rise and variability well.

I wrote in response:

[Note the first and last sentences of this paragraph] First, that there is not sufficiently extensive and accurate observational data to test a hypothesis. BUT, then we will create a model, and this model is validated against this same observational data. Then the model is used to draw all kinds of conclusions about the problem being studied.

This is the clearest, simplest example of certainty laundering I have ever seen. If there is not sufficient data to draw conclusions about how a system operates, then how can there be enough data to validate a computer model which, in code, just embodies a series of hypotheses about how a system operates?

A model is no different than a hypothesis embodied in code. If I have a hypothesis that the average width of neckties in this year’s Armani collection drives stock market prices, creating a computer program that predicts stock market prices falling as ties get thinner does nothing to increase my certainty of this hypothesis (though it may be enough to get me media attention). The model is merely a software implementation of my original hypothesis. In fact, the model likely has to embody even more unproven assumptions than my hypothesis, because in addition to assuming a causal relationship, it also has to be programmed with specific values for this correlation.
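The necktie example can be written out literally. This is a toy sketch with made-up names and an invented coefficient (`assumed_beta`), not a real market model. It only shows that the "prediction" is the assumption handed back with a number attached.

```python
def predict_market_change(tie_width_change_cm, assumed_beta):
    """Predicted percent change in a stock index for a change in tie width.

    assumed_beta is the hypothesized percent move per centimeter of tie
    width. Nothing in this function tests whether that relationship exists;
    it simply restates the hypothesis and adds one more unproven number.
    """
    return assumed_beta * tie_width_change_cm


if __name__ == "__main__":
    # Ties get 1 cm thinner. The "forecast" depends entirely on the guess.
    for beta in (2.0, -2.0):
        change = predict_market_change(-1.0, assumed_beta=beta)
        print(f"assumed_beta={beta:+.1f} -> predicted index change {change:+.1f}%")
```

Flip the sign of the guessed coefficient and the model confidently predicts the opposite outcome. Running the code adds no evidence either way.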

This brings me to the paper by Paul Pfleiderer of Stanford University. I don't want to overstate the congruence between his paper and my thoughts on this, but it is the first work I have seen to discuss this kind of certainty laundering (there may be a ton of literature on this but if so I am not familiar with it). His abstract begins:

In this essay I discuss how theoretical models in finance and economics are used in ways that make them “chameleons” and how chameleons devalue the intellectual currency and muddy policy debates. A model becomes a chameleon when it is built on assumptions with dubious connections to the real world but nevertheless has conclusions that are uncritically (or not critically enough) applied to understanding our economy.

The paper is long and nuanced but let me try to summarize his thinking:

My reason for introducing the notion of theoretical cherry picking is to emphasize that since a given result can almost always be supported by a theoretical model, the existence of a theoretical model that leads to a given result in and of itself tells us nothing definitive about the real world. Though this is obvious when stated baldly like this, in practice various claims are often given credence — certainly more than they deserve — simply because there are theoretical models in the literature that “back up” these claims. In other words, the results of theoretical models are given an ontological status they do not deserve. In my view this occurs because models and specifically their assumptions are not always subjected to the critical evaluation necessary to see whether and how they apply to the real world...

As discussed above one can develop theoretical models supporting all kinds of results, but many of these models will be based on dubious assumptions. This means that when we take a bookshelf model off of the bookshelf and consider applying it to the real world, we need to pass it through a filter, asking straightforward questions about the reasonableness of the assumptions and whether the model ignores or fails to capture forces that we know or have good reason to believe are important.

I know we see a lot of this in climate:

A chameleon model asserts that it has implications for policy, but when challenged about the reasonableness of its assumptions and its connection with the real world, it changes its color and retreats to being simply a theoretical (bookshelf) model that has diplomatic immunity when it comes to questioning its assumptions....

Chameleons arise and are often nurtured by the following dynamic. First a bookshelf model is constructed that involves terms and elements that seem to have some relation to the real world and assumptions that are not so unrealistic that they would be dismissed out of hand. The intention of the author, let’s call him or her “Q,” in developing the model may be to say something about the real world or the goal may simply be to explore the implications of making a certain set of assumptions. Once Q’s model and results become known, references are made to it, with statements such as “Q shows that X.” This should be taken as short-hand way of saying “Q shows that under a certain set of assumptions it follows (deductively) that X,” but some people start taking X as a plausible statement about the real world. If someone skeptical about X challenges the assumptions made by Q, some will say that a model shouldn’t be judged by the realism of its assumptions, since all models have assumptions that are unrealistic. Another rejoinder made by those supporting X as something plausibly applying to the real world might be that the truth or falsity of X is an empirical matter and until the appropriate empirical tests or analyses have been conducted and have rejected X, X must be taken seriously. In other words, X is innocent until proven guilty. Now these statements may not be made in quite the stark manner that I have made them here, but the underlying notion still prevails that because there is a model for X, because questioning the assumptions behind X is not appropriate, and because the testable implications of the model supporting X have not been empirically rejected, we must take X seriously. Q’s model (with X as a result) becomes a chameleon that avoids the real world filters.

Check it out if you are interested. I seldom trust a computer model I did not build and I NEVER trust a model I did build (because I know the flaws and assumptions and plug variables all too well).

By the way, the mention of plug variables reminds me of one of the most interesting studies I have seen on climate modeling, by Kiehl in 2007. It was so damning that I haven't seen anyone do it since (or at least get published doing it). I wrote about it in 2011 at Forbes:

My skepticism was increased when several skeptics pointed out a problem that should have been obvious. The ten or twelve IPCC climate models all had very different climate sensitivities -- how, if they have different climate sensitivities, do they all nearly exactly model past temperatures? If each embodies a correct model of the climate, and each has a different climate sensitivity, only one (at most) should replicate observed data. But they all do. It is like someone saying she has ten clocks all showing a different time but asserting that all are correct (or worse, as the IPCC does, claiming that the average must be the right time).

The answer to this paradox came in a 2007 study by climate modeler Jeffrey Kiehl. To understand his findings, we need to understand a bit of background on aerosols. Aerosols are man-made pollutants, mainly combustion products, that are thought to have the effect of cooling the Earth's climate.

What Kiehl demonstrated was that these aerosols are likely the answer to my old question about how models with high sensitivities are able to accurately model historic temperatures. When simulating history, scientists add aerosols to their high-sensitivity models in sufficient quantities to cool them to match historic temperatures. Then, since such aerosols are much easier to eliminate as combustion products than is CO2, they assume these aerosols go away in the future, allowing their models to produce enormous amounts of future warming.

Specifically, when he looked at the climate models used by the IPCC, Kiehl found they all used very different assumptions for aerosol cooling and, most significantly, he found that each of these varying assumptions was exactly what was required to combine with that model's unique sensitivity assumptions to reproduce historical temperatures. In my terminology, aerosol cooling was the plug variable.

When I was active doing computer models for markets and economics, we used the term "plug variable." Now, I think "goal-seeking" is the hip word, but it is all the same phenomenon.
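Here is a minimal sketch of how a plug variable works, under loudly stated assumptions: a one-line linear "climate model," an invented observed warming figure, and invented forcing fractions. None of these numbers come from Kiehl's paper or from any real model. They exist only to show the mechanics of goal-seeking.

```python
# All constants below are hypothetical, chosen only to illustrate the mechanism.
OBSERVED_WARMING = 0.7   # "historical" warming the hindcast must reproduce (deg C)
HISTORIC_FORCING = 0.4   # fraction of a CO2 doubling assumed to have occurred so far
FUTURE_FORCING = 1.0     # a full CO2 doubling in the projection


def tune_plug(sensitivity):
    """Goal-seek the aerosol term so the hindcast matches history exactly."""
    return OBSERVED_WARMING - sensitivity * HISTORIC_FORCING


def hindcast(sensitivity, aerosol_term):
    """Modeled historical warming: greenhouse warming plus the aerosol plug."""
    return sensitivity * HISTORIC_FORCING + aerosol_term


def forecast(sensitivity):
    """Projected warming, assuming the aerosol term goes away in the future."""
    return sensitivity * FUTURE_FORCING


if __name__ == "__main__":
    for sensitivity in (2.0, 3.0, 4.5):  # deg C per doubling, illustrative
        plug = tune_plug(sensitivity)
        print(
            f"sensitivity={sensitivity:.1f}  aerosol plug={plug:+.2f}  "
            f"hindcast={hindcast(sensitivity, plug):.2f}  "
            f"forecast={forecast(sensitivity):.2f}"
        )
```

Every sensitivity reproduces the same history, because the aerosol term was solved for precisely to make that happen. The forecasts then diverge widely once the plug is assumed away, so agreement with the past tells you nothing about which sensitivity, if any, is right.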

Postscript, an example with the partisans reversed: It strikes me that in our tribalized political culture my having criticised models by a) climate alarmists and b) the Obama Administration might cause the point to be lost on the more defensive members of the Left side of the political spectrum. So let's discuss a hypothetical with the parties reversed. Let's say that a group of economists working for the Trump Administration came out and said that half of the 4% economic growth we were experiencing (or whatever the exact number was) was due to actions taken by the Trump Administration and the Republican Congress. I can assure you they would have a sophisticated computer model that would spit out this result -- there would be a counterfactual model of "with Hillary" that had 2% growth compared to the actual 4% under Trump.

Would you believe this? After all, it's science. There is a model. Made by experts ("top men" as they say in Raiders of the Lost Ark). So would you buy it? NO! I sure would not. No way. For the same reasons that we shouldn't uncritically buy into any of the other model results discussed -- they are building counterfactuals of a complex process we do not fully understand and which cannot be tested or verified in any way. Just because someone has embodied their imperfect understanding, or worse their pre-existing pet answer, into code does not make it science. But I guarantee you have nodded your head or even quoted the results from models that likely were not a bit better than the imaginary Trump model above.